OpenCL gone wild

One of my favorite parts of programming is that there's constantly new tools coming out that make programming more interesting. One recent development I've stumbled upon is OpenCL, a specification that lets you design code for execution on a GPU or other parallel processor. I've been in desperate need for some additional speed in one of my recent projects, so I decided to give OpenCL a shot. If you're curious about the tools that I used, check out the last paragraph of the article.

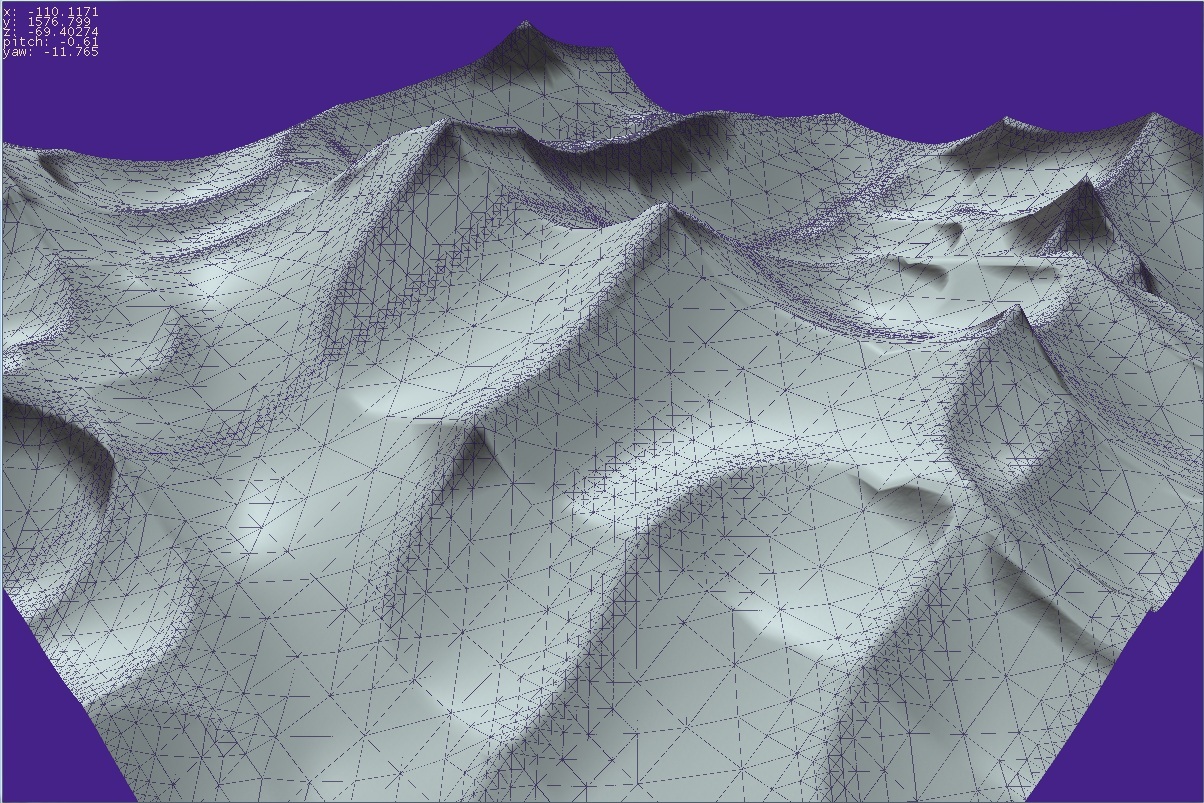

The problem: to generate vast swaths of terrain, and to optimize said terrain to reduce the number of vertexes without heavily impacting the quality of the terrain mesh. The generation aspect of the problem has been tackled by many programmers and math nerds who have developed methods such as perlin noise and ridged fractals. Both of these algorithms are embarrassingly parallel, making them good candidates for parallelization. In OpenCL, you launch a kernel(aka the entry function) with a specified number of worker threads. These worker threads identify themselves using methods like getglobalid(n), where n is the dimension of the id. Generating a mesh of fractal noise is trivial, you can just launch a kernel with a two dimensional array of workers. Set each dimension of the array to how many vertexes wide and long you want the generated mesh to be, and stick the fractal code in the kernel which interprets (xid,yid) as the position of the vertex to be generated.

As simple as this may sound, I ran into a few issues. The kernel was set to stick generated vertexes in an array of float3, but it turns out that internally, float3 is wrapped by a float4. This means that when I read the buffer from the kernel, the vertexes were enumerated as [x,y,z,0,x,y,z,0,x,y,z,0]. Not exactly a huge deal, but it would have saved me a lot of debugging time if the tutorials mentioned it somewhere.

The usual solution to simplifying terrain is to use quadtrees. Most of solutions that I see get very fancy with loads of objects and maintain a large relational structure to define the quadtree. These solutions also tend to be hopelessly slow because of all the cache thrashing they tend to cause, not to mention that the code is a huuuuge bitch to maintain. Since none of those fancy object oriented features are available in OpenCL (thank god), I took an array-based approach where the state of the quadtree is broken down into an array of boolean nodes that specify whether or not a vertex is "activated".

One of the painful issues I ran into was that barrier(), the method used to synchronize worker threads, only works across the work-group. As far as I can gather, there is no way to synchronize threads across an entire kernel execution. This means that every place where I would have needed to add a synchronization across all the worker threads, I have to end that kernel's execution there, then queue another kernel afterwards that contains the code that comes after the barrier. I was kinda upset on discovering this considering that the size of work-groups is heavily restricted, but it wasn't all that bad because the overhead associated with launching a kernel is relatively small.

Now at this point I had an array of vertexes, and an array of boolean values that specifies which of those vertexes are actually important. The final challenge was to create an array of indices that specify the order in which the vertexes are to be drawn. Unfortunately this was a pretty boring problem to solve, and I ended up with a solution full of hard-coding. At this point I had a good set of debugging/compiling tools, so programming the vertex-winder was almost trivial.

In conclusion, I have to say that working with opencl has certainly been an enlightening experience. Massively parallel programming involves a very different approach to solving problems than normal imperative programming. As devices start to come packed with more and more cores, opencl will likely become a more important specification. Being in its infancy, there's still some undocumented driver/compiler bugs you may run into, including the lack of a unified documentation source that doesn't suck, but if you hang in there you'll get the hang of it. I think it would be interesting to see a programming language that could convert sections of one's code to opencl behind the scenes, making the parallel aspect of programming invisible to the end user.

OKAY TIME FOR THE NUMBERS

Parameters: 129*129 vertex mesh @ 6 octaves of noise

Device: C#/CLR Intel(R) Core(TM) i5-3450 CPU @ 3.10GHz

Elapsed Execution Time: 531ms

Device: OpenCL Intel(R) Core(TM) i5-3450 CPU @ 3.10GHz

Elapsed Execution Time: 73ms

Device: OpenCL ATI Radeon HD 5850

Elapsed Execution Time: 21msedit: since publishing this post I've been able to get gpu elapsed execution time down to 5ms (!)

Tools

Dropping into opencl head-first is a blast. Virtually none of the tutorials out there talk about resources and tools to help you write and debug opencl code, which makes opencl look like a black box from which you can't debug or get worthwhile compiler feedback. Your GPU's vendor writes the opencl compiler for their specific platform, which means a failed compilation gets you useful errors like COMPILATION_FAILED. To fix this issue, I found several tools including CMSoft's OpenCL Code Checker, and the much more useful Intel SDK Kernel Builder. In terms of debugging, you will encounter the same black-box issue. AMD released a tool called ocl-emu, a visual studio project that has macros and bindings that lets you -kinda- write opencl code in a C++ environment. Unfortunately opencl's syntax doesn't translate directly into ocl-emu's idea of opencl syntax, and I found the program to be lacking in documentation. In addition, many opencl functions were not implemented verbatim which made using the tool a complete hassle. On the other hand we have Intel's SDK which provided some really neat toys. There's a Visual Studio plugin that lets you open your CL source file in VS, and place breakpoints and add watches to variables as if the code was native, kinda like AMD's setup but it works without having to "convert" code or write scripts to generate the parameters for your kernels. I was also surprised to find out that the SDK's Kernel Builder program also included a fairly comprehensive set of profiling tools. Honestly, if I hadn't discovered Intel's SDK I probably wouldn't have been able to finish this project. If you plan on doing any kind of heavy opencl programming in the future, it is a must have.